Introduction

As a JavaScript developer, you might know that Node.js is built on top of Chrome’s V8 engine, which runs JavaScript programs very fast by compiling them directly to machine code. In a way, this gives V8 the role of an operating system running on top of your OS, similar to how you might imagine a virtual machine running.

At Rocket.Chat, our goal has always been to build a fully customizable communications platform for organizations with high standards of data protection. One of our unique advantages is the ability to self-host it in an air-gapped environment. This lets our users truly own their data by keeping it safe inside their own infrastructure.

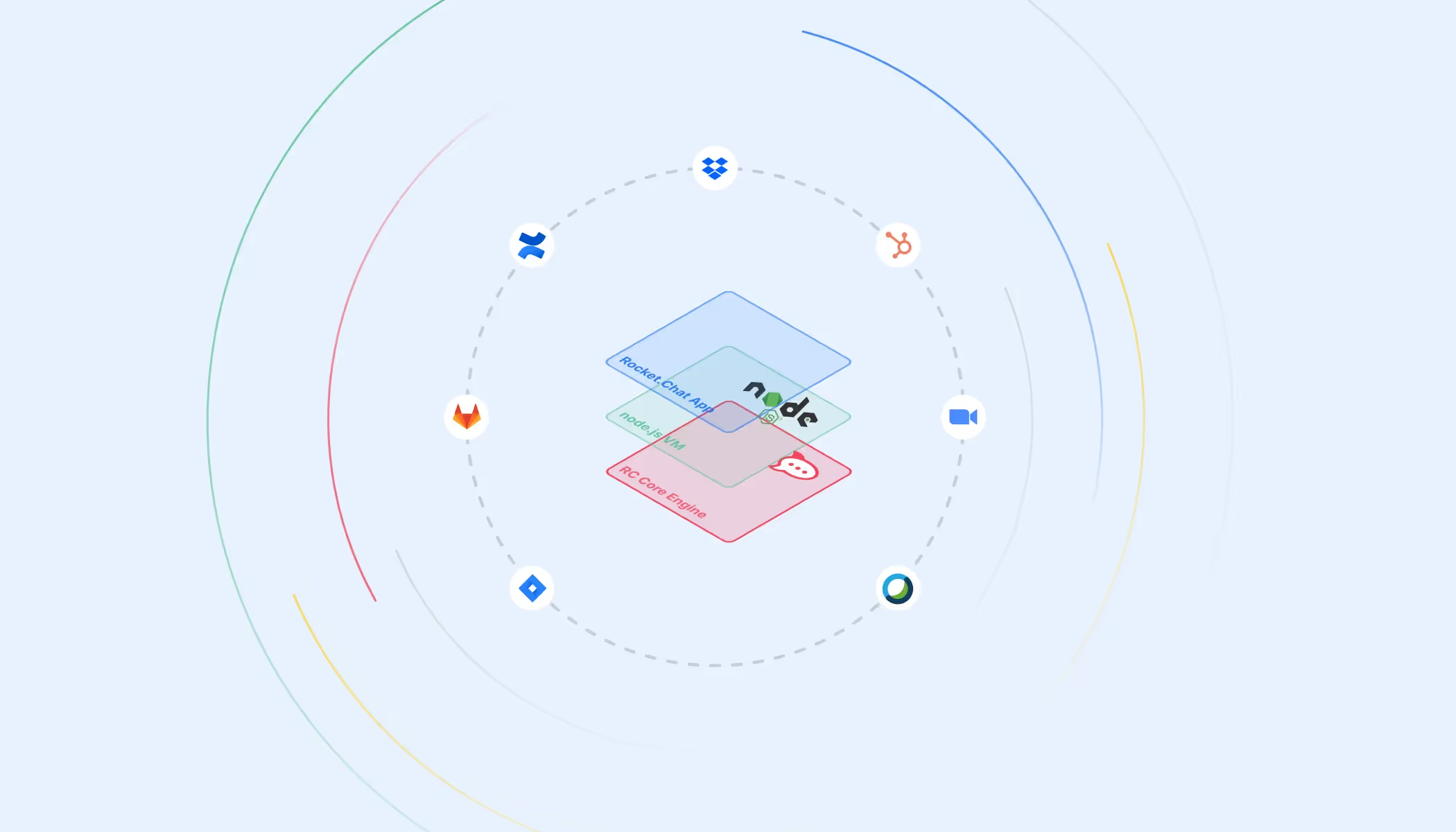

However, as our user base grew, we realized that their need for a diverse pool of integrations in the workspace was going to increase maintenance complexity: developing, reviewing, testing and making sure each integration kept working. To address this (and a few similar concerns), we set out to take our platform's flexibility a step further with the introduction of the Apps Engine, which is built on the Node.js VM module.

Exploring the Node.js VM module

A context here is basically an alternate environment that we’re creating with the use of this vm module. This environment is nothing but a bunch of key-value pairs, much like the environment variables of a shell, with each context running directly on top of the V8 engine. Code executed in a context only sees the keys that context holds, which is how the Node.js VM isolates the execution of the code it receives from the global scope.
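As a minimal sketch of that idea (the variable names `secret` and `greeting` are made up for illustration), the snippet below creates a context from a plain object and runs a script in it with `vm.createContext` and `vm.runInContext`:

```javascript
const vm = require('node:vm');

// A variable that lives in the host's global scope.
globalThis.secret = 'host-only';

// The context is an object whose key-value pairs become the script's globals.
const context = vm.createContext({ greeting: 'hello' });

// The script can read and write the keys of its own context,
// but the host's globals are not part of that context.
const result = vm.runInContext(
  'greeting += " from the sandbox"; typeof secret',
  context,
);

console.log(result);           // "undefined": `secret` does not exist in the context
console.log(context.greeting); // "hello from the sandbox"
```

The script changed `greeting` because that key belongs to its context, while `secret` stayed out of reach because it only exists on the host's global object.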

How we use the VM Module - Implementing our Apps Engine

The Apps-Engine is the framework that allows for the development of RC Apps, i.e., custom plugins that extend the functionality of the chat server. These apps allow for a tighter integration with whatever workflow our users have, covering most, if not all, of what incoming/outgoing integrations and bots do. It also has some tricks of its own, like adding interactive buttons to messages and opening modals (check out Poll for a showcase!), and much more!

While developing this, we wanted all our workspaces to have access to Apps without needing to leave the comfort of their intranet. This prompted us to follow the rather uncommon path of having those apps running inside a Rocket.Chat server.

With that premise as a starting point, we knew we'd have to concern ourselves with keeping each of those apps running in an isolated scope inside the server, i.e., having no direct access to the server's functions or to the resources of other apps running alongside them. From there, we identified the two types of components we needed in place for this to work:

- some bridges to safely connect apps to the core server's features, and

- a way to sandbox each app so they have their own exclusive context.

Given those points, the Node.js VM module sounded like the perfect approach for running any method within the App itself while isolating it from the server’s scope. Although that sounds straightforward, there were a lot of moving parts involved. The implementation required us to work out various lifecycle methods for loading apps, running them, extending their configuration, and so on.
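To make the two components more concrete, here is a rough sketch of how a bridge and a per-app context could fit together. Everything named here (`serverMessages`, `createMessageBridge`, `runAppCode`) is hypothetical and not taken from the Apps-Engine source; it only shows the shape of the idea, not a hardened boundary.

```javascript
const vm = require('node:vm');

// Hypothetical stand-in for a core server feature the apps must not touch directly.
const serverMessages = [];

// Hypothetical bridge: the only entry point to the server that an app is handed.
function createMessageBridge(appId) {
  return Object.freeze({
    send(text) {
      serverMessages.push({ appId, text: String(text) });
    },
  });
}

// Each call builds an exclusive context that contains nothing but the app's bridge.
function runAppCode(appId, code) {
  const context = vm.createContext({ bridge: createMessageBridge(appId) });
  return vm.runInContext(code, context, { timeout: 1000 });
}

// App A sets a global inside its own context...
runAppCode('app-a', 'bridge.send("hi from A"); globalThis.leak = 1;');
// ...which app B cannot see, because it runs in a different context.
const seenByB = runAppCode('app-b', 'bridge.send("hi from B"); typeof leak');

console.log(seenByB);               // "undefined"
console.log(serverMessages.length); // 2
```

Both apps reached the server only through `bridge.send`, and the global that app A created never showed up in app B's context.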

Loading Apps

Rocket.Chat Apps are, at the end of the day, JavaScript classes. While loading an app, the first thing we need to do is to instantiate its class in the correct context. We do it like so:

This is just a common place for us to set the timeout and filename properties every time we call the VM. It's interesting to note that the new context for the method call is different from the context we instantiated the App class in, which means they don't share any globals by default. In other words, the method call can't see any variable declared in the outer scope that is not a property of the App class, making the class a single point of control for safety.
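A minimal sketch of such a common entry point could look like the following. The `runCode` helper, its default values and the sample `app` object are assumptions for illustration; only `vm.runInNewContext` and its `filename` and `timeout` options come from the Node.js API.

```javascript
const vm = require('node:vm');

// Hypothetical wrapper: one place that sets `filename` and `timeout`
// for every call into the VM. Each call gets a brand-new context.
function runCode(code, sandbox, { filename = 'app.js', timeout = 1000 } = {}) {
  return vm.runInNewContext(code, sandbox, { filename, timeout });
}

// A variable in the outer (host) scope that is never placed in a context.
const outerVariable = 'not visible inside the VM';

const app = {
  name: 'hello-app',
  greet(who) { return `${this.name} greets ${who}`; },
};

// The call only sees what was put in its context: `app` and `who`.
const greeting = runCode('app.greet(who)', { app, who: 'world' }, { filename: 'hello-app.js' });
const outerType = runCode('typeof outerVariable', { app });

// The timeout interrupts code that would otherwise block the server forever.
let timedOut = false;
try {
  runCode('while (true) {}', {}, { timeout: 50 });
} catch (error) {
  timedOut = true;
}

console.log(greeting);  // "hello-app greets world"
console.log(outerType); // "undefined"
console.log(timedOut);  // true
```

Centralizing the options this way means no call site can forget the timeout, and the `filename` shows up in stack traces when an app throws.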

Other uses in the framework's internals

- Running an endpoint defined by an app;

- Running a slash command defined by an app;

- Running a processor for a job scheduled by the app.

Our learnings around the Node.js VM Module

As mentioned before, our main motivation for choosing the Node.js VM module was isolating each app in its own scope, effectively preventing apps from interacting directly with the host Rocket.Chat server or with other apps, so they wouldn't be able to inject data and disrupt the system, or leak data from other sources. Additionally, it spared us the complexity of spawning, controlling and managing communication with external processes to run that logic.

For the most part, we can say that the Node.js VM module delivered on what it promises. Every execution of the app's internal code has its own context, and this context is controlled by the Apps-Engine itself. Of course, we needed to adapt and iterate a lot, as our first assumptions of how this interaction with the module would work were not exactly right.

However, our implementation has some shortcomings. One of them is the fact that, by isolating the apps that much, we take away some key modules that are very familiar to Node.js devs, which makes our environment feel "alien" - it's still TypeScript, but the tools are now unknown.

Finally, the hardest lesson was that the vm module does not prevent ALL kinds of interactions with the outside world, and it is still possible to escape the sandbox if you really want to. The Node.js docs themselves now state this (glad they added it!). We mitigate this in our Marketplace with the approval process, as we evaluate the source code of apps before allowing them to be published, but as we increase the support for NPM modules this might become infeasible.
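One well-known way this happens is through any host object that gets placed in a context. The sketch below is a generic illustration of that behavior, not a description of a specific issue in the Apps-Engine; the `bridge` name is just a placeholder for an arbitrary host object.

```javascript
const vm = require('node:vm');

// Any object created on the host side carries references back to the host realm.
const context = vm.createContext({ bridge: {} });

// Asked directly, the context knows nothing about the host's `process` global.
const direct = vm.runInContext('typeof process', context);

// But `bridge.constructor` is the host's Object, and its constructor is the
// host's Function, which compiles code against the host's global scope.
const viaHostObject = vm.runInContext(
  "bridge.constructor.constructor('return typeof process')()",
  context,
);

console.log(direct);        // "undefined"
console.log(viaHostObject); // "object": the script reached the host's globals
```

This is why reviewing app source code, or moving to a stronger isolation boundary, matters even when every call already runs in its own context.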

Hence, there are some tough choices we have to make moving forward.

The Future of the Node.js VM in the Apps-Engine

From our experience experimenting with different apps on Rocket.Chat, it's clear that we need to improve our approach to isolating apps in the future. This doesn't just mean improving the security aspect of the product, but also increasing the flexibility and capabilities of the Apps Engine as a whole. However, we're not sure we'll be able to move forward with our current use of the Node.js VM. There are some alternatives we've been discussing:

- The vm2 NPM package, whose premise is to provide a secure sandbox for running untrusted code with an API similar to the native Node.js module. We still need to investigate this further, but as it sounds, it would allow for a simpler transition in the framework's source code; basically changing `vm` to `vm2` 🤔

- Running the Apps-Engine as a totally separate process, by using Node.js's own native modules to fork an isolated process. This would require a much bigger effort to achieve, as we would essentially need to create a process manager to control resources and the communication between the Rocket.Chat process and the Apps' processes. But this would mean achieving true isolation.

- Another option we're evaluating is to make the framework act more like a serverless function platform. This is kind of a specialization of the alternative above, which would also include an overhaul of the Apps-Engine API for it to be more similar to those of Function-as-a-Service offerings. Arguably the API would be more familiar and idiomatic to today's code patterns.
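To give a feel for the separate-process alternative, here is a deliberately small sketch. It uses the synchronous `spawnSync` from `node:child_process` and a one-shot JSON exchange over stdin/stdout to stay short, whereas a real process manager would more likely keep long-lived children (for example via `fork`) and handle messaging asynchronously. The `appProcessSource` script and the `callAppInSeparateProcess` helper are hypothetical.

```javascript
const { spawnSync } = require('node:child_process');

// Hypothetical app logic that runs in the child process: it reads one JSON
// request from stdin and writes one JSON response to stdout.
const appProcessSource = `
  const request = JSON.parse(require('node:fs').readFileSync(0, 'utf8'));
  const response = { reply: 'echo: ' + request.text, pid: process.pid };
  process.stdout.write(JSON.stringify(response));
`;

// Hypothetical helper: runs the app logic in a separate Node.js process.
function callAppInSeparateProcess(request) {
  const child = spawnSync(process.execPath, ['-e', appProcessSource], {
    input: JSON.stringify(request), // sent to the child's stdin
    encoding: 'utf8',
    timeout: 5000,                  // kill the child if it takes too long
  });
  if (child.status !== 0) {
    throw new Error(`app process failed: ${child.stderr}`);
  }
  return JSON.parse(child.stdout);
}

const response = callAppInSeparateProcess({ text: 'ping' });
console.log(response.reply);               // "echo: ping"
console.log(response.pid !== process.pid); // true: the code ran in another process
```

Here the app code shares no memory with the server at all, at the cost of serializing every request and response and paying the startup time of a process.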

Because the project is open source, the community often recommends solutions to us, which adds to the advantage of having multiple eyes on the project! This discussion still needs to mature a lot, and we will probably run a few proofs of concept before reaching a final decision (contributors are welcome!). Introducing this new "layer" between the framework and the host server will likely add overhead, both at runtime and in maintenance, and we'll need some experimenting to make a well-informed choice.

Frequently asked questions about <anything>

- Digital sovereignty

- Federation capabilities

- Scalable and white-labeled

- Highly scalable and secure

- Full patient conversation history

- HIPAA-ready

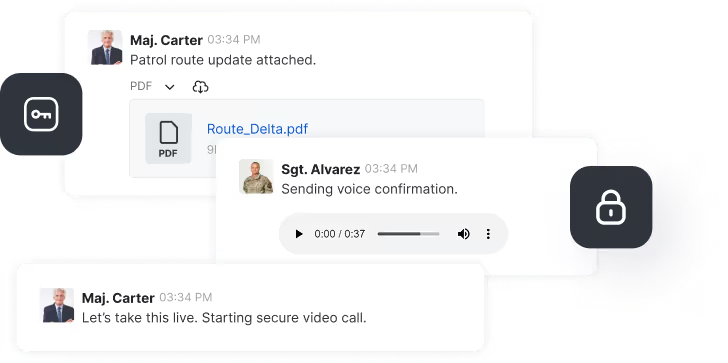

for mission-critical operations

- On-premise and air-gapped ready

- Full control over sensitive data

- Secure cross-agency collaboration

%201.svg)

- Open source code

- Highly secure and scalable

- Unmatched flexibility

- End-to-end encryption

- Cloud or on-prem deployment

- Supports compliance with HIPAA, GDPR, FINRA, and more

- Supports compliance with HIPAA, GDPR, FINRA, and more

- Highly secure and flexible

- On-prem or cloud deployment

.avif)